Load Balancing

What is Load Balancing?

Load balancing distributes network traffic across multiple servers in a resource pool that supports an application. The goal is to reduce the workload on each server, improve application availability, and optimize the performance and reliability of the system. Today's Internet applications often have to serve millions or even tens of millions of users, and it is difficult for a single server to deliver data to all of them quickly and reliably. In this situation, a load balancer acts as a coordinator that distributes the heavy traffic sensibly across the servers in the pool.

What Problems Does Load Balancing Solve?

Imagine shopping at a supermarket on the weekend with only one checkout lane open. Weekend crowds are large, so the queue is long and you wait a long time before you can check out. The cashier's energy and the checkout machine's lifespan are also limited, so a single overloaded lane may break down and delay checkout even further. Under normal circumstances, supermarkets open many checkout lanes so that shoppers spread out across them, which greatly reduces queue congestion, lightens the workload of each cashier, and keeps business running smoothly.

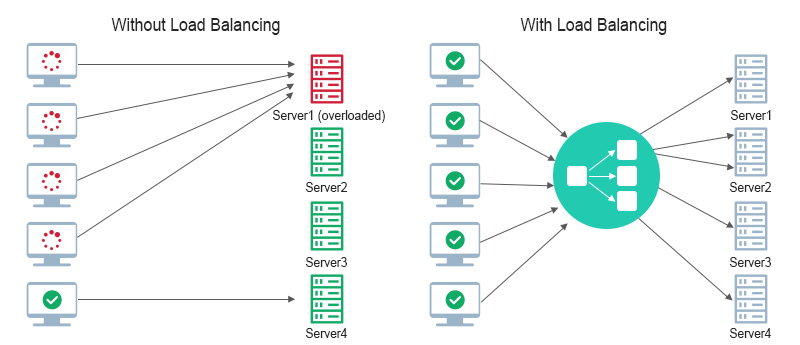

The core problem that load balancing solves is similar to the scenario above: the computing and storage capacity of a single server or node is limited. If an application receives a large number of requests from many concurrent users and only one server or node handles the traffic, problems caused by excessive load can easily occur. By distributing these requests across multiple servers through load balancing, the work is shared, which keeps the system efficient and stable.

How Does Load Balancing Work?

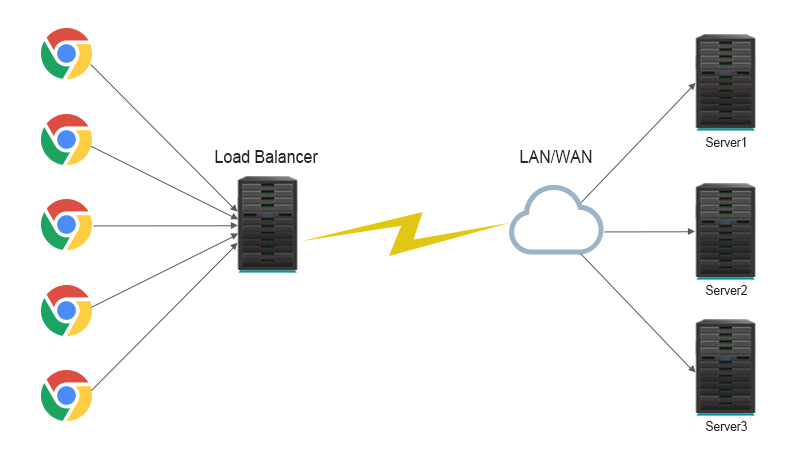

Load balancing efficiently distributes incoming network traffic across multiple servers to optimize performance and ensure reliability. It can be implemented using hardware appliances, software applications, or cloud services.

When a client sends a request, the load balancer chooses a server to handle it according to a predefined algorithm. These algorithms can be static, where traffic is distributed according to a fixed rule such as a round-robin rotation, or dynamic, where the load balancer considers real-time factors such as server health, workload, and capacity. By routing traffic to the most appropriate servers, load balancing prevents any single server from being overwhelmed and ensures that applications remain responsive even during traffic spikes.
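The difference between a static and a dynamic algorithm can be sketched in a few lines of Python. This is a minimal illustration, not a production design: round robin stands in for the static case and least connections for the dynamic case, and the class names and server names (`app-1`, `app-2`, `app-3`) are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Static strategy: hand out servers in a fixed rotation."""

    def __init__(self, servers):
        self._rotation = cycle(servers)

    def pick(self):
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Dynamic strategy: pick the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        # min() returns the first server among those tied for the lowest count.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a request finishes so the count reflects current load.
        self.active[server] -= 1


servers = ["app-1", "app-2", "app-3"]  # hypothetical server names

rr = RoundRobinBalancer(servers)
rr_picks = [rr.pick() for _ in range(4)]  # wraps back to app-1 on the 4th pick

lc = LeastConnectionsBalancer(servers)
first = lc.pick()    # all servers idle, so the first one is chosen
second = lc.pick()   # app-1 is now busy, so app-2 is chosen
lc.release(first)    # app-1 finishes its request
third = lc.pick()    # app-1 is idle again and is chosen before app-3
```

The round-robin balancer ignores what the servers are doing, which makes it cheap and predictable. The least-connections balancer needs feedback (`release`) to track load, which is the extra cost of a dynamic strategy.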

Load balancers can also help optimize resource usage: working together with a scaling mechanism, additional servers can be brought into the pool when demand increases and taken out when traffic decreases. This helps maintain consistent performance and high availability without keeping idle capacity running.
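As a rough sketch of the scaling decision described above, the function below estimates how many servers should be active for a given request rate. The function name, the per-server capacity figure, and the minimum and maximum bounds are all assumptions chosen for illustration; real systems usually base this on measured metrics and add cooldown periods.

```python
import math


def desired_server_count(requests_per_second, capacity_per_server,
                         min_servers=1, max_servers=10):
    """Return how many servers to keep active for the current demand.

    capacity_per_server is the (assumed) number of requests per second
    that one server can handle comfortably.
    """
    needed = math.ceil(requests_per_second / capacity_per_server)
    # Never drop below the minimum or grow past the maximum pool size.
    return max(min_servers, min(max_servers, needed))


peak = desired_server_count(450, capacity_per_server=100)    # traffic spike
quiet = desired_server_count(20, capacity_per_server=100)    # low traffic
capped = desired_server_count(5000, capacity_per_server=100) # beyond the pool limit
```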

What are the Benefits of Load Balancing?

Load balancing enhances application availability, scalability, security, and performance by distributing network traffic across multiple servers.

Availability

Load balancers automatically detect server issues or maintenance needs and redirect traffic to healthy servers, ensuring continuous application availability and minimizing downtime.
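One way to picture this behavior is a pool that counts consecutive failed health checks and skips any server that exceeds a threshold. The sketch below assumes that health-check results are reported to the pool from elsewhere. The class name, the `max_failures` threshold, and the server names are hypothetical.

```python
class HealthAwarePool:
    """Rotate over servers, skipping those that keep failing health checks."""

    def __init__(self, servers, max_failures=3):
        self.failures = {server: 0 for server in servers}
        self.max_failures = max_failures
        self._next = 0

    def report(self, server, ok):
        # A successful check resets the counter; a failed one increments it.
        self.failures[server] = 0 if ok else self.failures[server] + 1

    def healthy(self):
        return [s for s, count in self.failures.items()
                if count < self.max_failures]

    def pick(self):
        candidates = self.healthy()
        if not candidates:
            raise RuntimeError("no healthy servers available")
        server = candidates[self._next % len(candidates)]
        self._next += 1
        return server


pool = HealthAwarePool(["app-1", "app-2"], max_failures=2)
pool.report("app-1", ok=False)
pool.report("app-1", ok=False)           # app-1 is now considered unhealthy
picks = [pool.pick() for _ in range(3)]  # every request goes to app-2
pool.report("app-1", ok=True)            # app-1 recovers and rejoins the pool
recovered = pool.healthy()
```

Requiring several consecutive failures before removing a server avoids taking it out of rotation because of a single dropped probe.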

Scalability

By intelligently distributing traffic, load balancers prevent any single server from becoming overwhelmed. They allow applications to scale quickly by adding more servers during traffic spikes, ensuring that large amounts of traffic are handled smoothly.

Security

Load balancers can improve security by spreading traffic across multiple servers, which reduces the impact of attacks such as distributed denial-of-service (DDoS). Many can also integrate security features such as SSL/TLS encryption and traffic monitoring to protect against threats.

Performance

Load balancers optimize performance by directing requests to the most appropriate server, reducing latency, and ensuring consistent, high-speed application responses even during peak demand.

What are the Types of Load Balancing?

Load balancing comes in many forms, each designed to meet different network needs and optimize traffic distribution.

Hardware load balancers

These are physical devices equipped with specialized proprietary software to manage large volumes of application traffic. Traditionally, they are sold as standalone devices, often deployed in pairs for redundancy. Hardware load balancers are ideal for environments that require high performance and reliability, as they can handle large loads with built-in virtualization capabilities.

Software load balancers

Software load balancers run in virtual machines or cloud environments, providing flexibility and scalability. They are often part of an application delivery controller (ADC) and provide additional features such as caching, compression, and traffic shaping. These load balancers can dynamically increase or decrease resources based on fluctuating traffic, making them ideal for modern cloud-based applications.

Network load balancers

Network load balancers route traffic based on network-level data, such as IP addresses and protocols, rather than on the content of each request, which helps keep latency low and throughput high across the network.
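A simple example of a decision based only on network-level data is source-IP hashing, where the client's IP address determines the server. The sketch below is one possible way to do this, not how any particular product works. The function name and the addresses (taken from the reserved documentation ranges) are assumptions.

```python
import hashlib


def pick_by_source_ip(source_ip, servers):
    """Map a client IP address to a server using a stable hash."""
    digest = hashlib.sha256(source_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap
    # it onto the list of servers.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # hypothetical backends

a = pick_by_source_ip("203.0.113.7", servers)
b = pick_by_source_ip("203.0.113.7", servers)  # same client, same server
```

Because the choice depends only on the source address, repeated connections from the same client reach the same server as long as the server list does not change. If a server is added or removed, many clients are remapped, which is why some systems use consistent hashing instead.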

Global server load balancers (GSLB)

These load balancers are designed to distribute traffic across servers in multiple geographic locations, ensuring application availability and disaster recovery by routing requests to the closest or most available servers. DNS-based load balancing is a key method used in GSLB, allowing requests to be directed based on factors such as geographic proximity or server availability, ensuring seamless access even in the event of a server failure.
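The selection logic behind DNS-based GSLB can be sketched as a function that answers a lookup with the address of the closest site that is still available. The sketch below only models the decision, not a DNS server. The site names, the latency figures, and the addresses (from the reserved documentation ranges) are invented for illustration.

```python
def resolve_site(client_region, sites):
    """Return the address of the closest available site for a client region."""
    available = [site for site in sites if site["healthy"]]
    if not available:
        raise RuntimeError("no site is available")
    # Sites with no latency figure for this region are treated as farthest away.
    best = min(
        available,
        key=lambda site: site["latency_ms"].get(client_region, float("inf")),
    )
    return best["address"]


# Hypothetical sites and latency estimates.
sites = [
    {"name": "eu-west", "address": "192.0.2.10", "healthy": True,
     "latency_ms": {"eu": 20, "us": 110}},
    {"name": "us-east", "address": "198.51.100.10", "healthy": True,
     "latency_ms": {"eu": 95, "us": 15}},
]

eu_answer = resolve_site("eu", sites)        # closest site for European clients
sites[0]["healthy"] = False                  # simulate an outage at eu-west
failover_answer = resolve_site("eu", sites)  # falls back to the remaining site
```

In practice the answer is also affected by DNS caching, so clients may keep using an old address until the record's time to live expires.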