How May Optical Transceivers Evolve in the AI Era?

Since ChatGPT's rapid growth through January 2023, the application developed by OpenAI has won unprecedented attention and favor from users worldwide. Its release also stimulated the development of competing products, setting off a race among global companies to deploy artificial intelligence.

Nvidia has reported sales growth in Artificial Intelligence (AI) hardware, including fiber optic interconnects. Google plans to boost investment in AI server clusters. Other cloud companies, such as Amazon and Microsoft, can lead the development of new AI applications. The market has recognized that AI systems urgently need optical transceivers to provide faster, higher-bandwidth data transmission between computing servers than ever before. This article offers a market insight into how transceivers may evolve in the AI-driven era.

What Does an Optical Transceiver Have to Do with Artificial Intelligence?

ChatGPT reached one hundred million monthly active users within two months of its launch on November 30, 2022, making it the fastest-growing consumer software application in history. About 13 million individual users visit ChatGPT each day, and that number is still growing. Operating ChatGPT requires powerful cloud computing resources to meet this massive user demand. Otherwise, incidents like ChatGPT’s downtime due to a surge in traffic on the evening of February 7 will happen frequently.

Generative AI is trained on an existing dataset, which you may notice in some of ChatGPT's answers: "my knowledge was last updated in January 2022." Training on such a dataset involves a massive number of parameters, which provide robust computing and machine learning ability and are distributed across tens of thousands of controllers. AI is the primary factor driving demand for data centers, and data centers require transceivers to carry data across their links. Current and future data centers must add a dedicated AI unit to process massive datasets and conduct complex computing in real time.

Architecture Reshaping on the Training and Prediction Sides - A Shift to CLOS Networking: Fat-Tree, Spine-Leaf and What's Next?

The widespread application of AI, and the resulting greater bandwidth demand on network terminals and computing hardware such as CPUs and GPUs, may usher in changes to the network architecture of AI data centers.

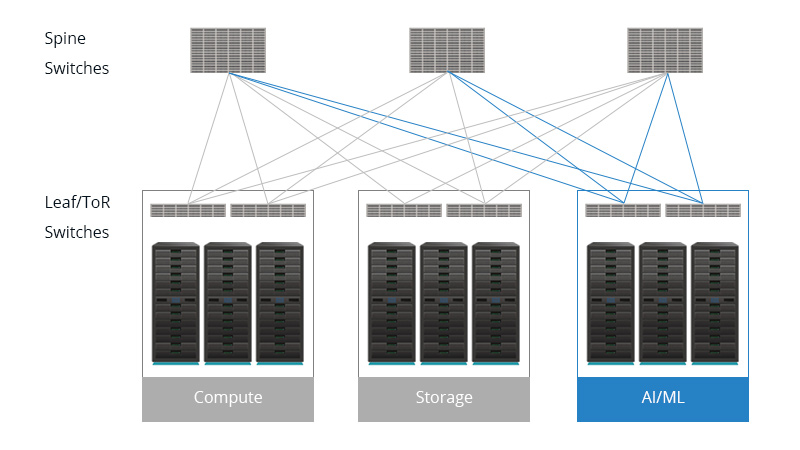

Generative AI platforms adopt cloud-side training and terminal-side prediction. The demand for transceiver modules for AI training is strongly related to GPU shipments. In contrast, the demand for modules on the AI prediction side is strongly associated with the data traffic required by end users. Fat-tree architecture is designed to be congestion-free, so it might dominate the cloud training side network to meet high-performance network requirements. Because the prediction (inference) side faces end users, a potential surge in user numbers driven by popular video or image AI applications may require even more computational power than the training side. Therefore, the prediction side prefers the spine-leaf architecture to handle the increased data load propelled by AI applications. The reshaping of AI data center architecture is expected to become another driver of demand for optical transceivers and network switches.

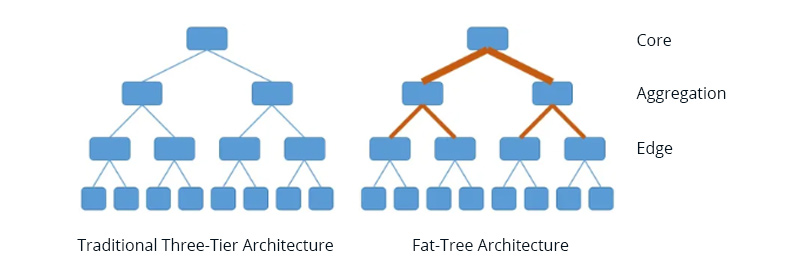

The traditional three-tier Core-Aggregation-Access architecture is based on a north-south transmission mode, which reached its limits when east-west traffic began to dominate in data centers. CLOS networking has become essential for data centers that need a non-blocking, multistage switching network.

The Fat-Tree architecture marks the entry of the CLOS network model into the data center field and has seen success in Nvidia data centers. Fat-Tree deploys a large number of identical, low-performance, cheap switches to construct a large-scale network with no congestion. However, Fat-Tree also has its drawbacks. For instance, its expansion scale is limited by the port density of the core switches, which is not conducive to the sustainable growth of data centers.
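The port-density limit can be made concrete with a rough sizing sketch. It assumes the classic three-tier k-ary fat-tree built entirely from identical k-port switches, and it assumes every switch-to-switch link is optical with one transceiver at each end; neither assumption comes from this article, and the function name `fat_tree_size` is purely illustrative.

```python
def fat_tree_size(k: int) -> dict:
    """Rough sizing of a classic k-ary fat-tree built from k-port switches.

    Assumptions (not from the article): three tiers (edge, aggregation, core),
    k pods, and one optical transceiver at each end of every
    switch-to-switch link.
    """
    if k < 2 or k % 2 != 0:
        raise ValueError("k must be an even number of ports, at least 2")
    half = k // 2
    hosts = k * half * half      # k pods * (k/2 edge switches) * (k/2 hosts each)
    edge = k * half              # k/2 edge switches per pod
    aggregation = k * half       # k/2 aggregation switches per pod
    core = half * half           # (k/2)^2 core switches
    # Edge-to-aggregation and aggregation-to-core layers each carry k^3/4 links.
    switch_links = 2 * hosts
    return {
        "hosts": hosts,
        "switches": edge + aggregation + core,
        "core_switches": core,
        "switch_links": switch_links,
        "transceivers": 2 * switch_links,
    }


if __name__ == "__main__":
    for ports in (32, 64, 128):
        size = fat_tree_size(ports)
        print(f"{ports}-port switches: {size['hosts']} hosts max, "
              f"{size['switches']} switches, {size['transceivers']} transceivers")
```

Under these assumptions the maximum host count grows with the cube of the switch port count, so once the fabric is full, the only way to grow further is to replace switches with higher-port-density models.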

The two-tier spine-leaf architecture, another CLOS network model, has gained momentum in data center connections. Spine-leaf is a flat structure suited to the rise of east-west traffic. The spine switch is similar to a core switch, and the leaf switches are directly connected to computers. All switches are bidirectional for data input and output. A spine-leaf network has the advantages of flat design, easy expansion, predictable latency, high security, and high bandwidth utilization. However, spine-leaf networking requires the switches at every point in the fabric to be Layer 3 switches, which costs much more than the traditional three-tier architecture, where only the core switch needs to be Layer 3. This is why spine-leaf networking became popular in data centers only in the last few years, once the cost of switching devices began to drop.
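As a rough illustration of how a spine-leaf fabric scales, the sketch below counts fabric links, transceivers, and the leaf oversubscription ratio. It assumes a full mesh with exactly one uplink from each leaf to each spine and one transceiver at each end of every leaf-to-spine link; the function name, parameters, and example figures are hypothetical and not taken from this article.

```python
def spine_leaf_plan(leaves: int, spines: int, downlinks_per_leaf: int,
                    downlink_gbps: float, uplink_gbps: float) -> dict:
    """Rough sizing of a two-tier spine-leaf fabric.

    Assumptions (not from the article): every leaf has exactly one uplink to
    every spine, and each leaf-to-spine link uses two optical transceivers.
    """
    if min(leaves, spines, downlinks_per_leaf) < 1:
        raise ValueError("leaves, spines and downlinks_per_leaf must be >= 1")
    fabric_links = leaves * spines
    downlink_capacity = downlinks_per_leaf * downlink_gbps
    uplink_capacity = spines * uplink_gbps
    return {
        "servers": leaves * downlinks_per_leaf,
        "fabric_links": fabric_links,
        "fabric_transceivers": 2 * fabric_links,
        # 1.0 means non-blocking; higher values mean uplinks are oversubscribed.
        "oversubscription": downlink_capacity / uplink_capacity,
    }


if __name__ == "__main__":
    # Hypothetical example: 16 leaves, 8 spines, 48 x 100G server ports per leaf,
    # 400G uplinks.
    before = spine_leaf_plan(16, 8, 48, 100, 400)
    after = spine_leaf_plan(17, 8, 48, 100, 400)   # add one leaf
    print(before)
    print("extra transceivers for one more leaf:",
          after["fabric_transceivers"] - before["fabric_transceivers"])
```

Adding a leaf only adds one link per spine, which is one way to read the "easy expansion" advantage; the spine port count still caps how many leaves can be attached.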

How Will Optical Modules Affect Technological Change in the AI Era?

Optical transceivers are expected to drive the next-generation technology revolution as the core component of data center interconnection and internal interconnection.

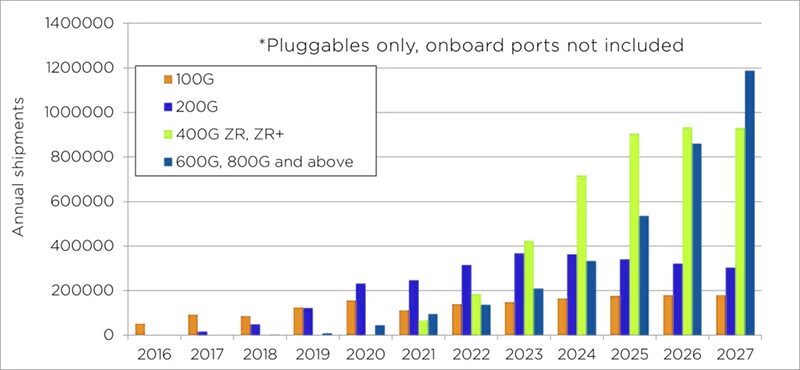

As 400G becomes the dominant standard in data center interconnects for handling massive volumes of data traffic between servers and storage, 4x 100G and 8x 100G breakout products have proved popular. However, large AI models like ChatGPT place higher demands on data traffic within and between data centers, and the upgrade from 400G to 800G is becoming a new trend. According to LightCounting forecast data, shipments of DWDM ports at higher speeds (600G, 800G, and above) are expected to increase in 2024 and over the next few years.

Hyperscale data center operators look forward to adopting next-generation optics to enable rapid and massive data flow between servers and storage devices. This trend also addresses the demand for emerging technologies such as the Internet of Things (IoT), Artificial Intelligence (AI), and Machine Learning (ML).

Source: LightCounting Optical Communications Market Forecast, April 2022.

We can expect that optical modules will play a vital role in the following fields in the era of artificial intelligence:

• Developing better AI systems: AI systems should rely upon optical transceivers to achieve ultra-fast and reliable data transmission.

• DCI and Supercomputers: Interconnecting data centers and supercomputers that host AI systems requires high speed and high bandwidth for massive data analysis and computing.

• Motivating the development of AI-based Edge-computing: IoT devices such as sensors and cameras need the capability to process data quickly with minimized latency.

What Issues Do Future Transceivers Need to Solve in the AI Era?

Power Consumption

Power consumption is a key concern because there are thousands of transceivers in a large data center, or even hundreds of thousands in a hyperscale data center. As the speed of optical modules increases, so does their power consumption. The transition from 1G to 800G confirms this: 1W for the 1G SFP module, 1.5W for the 10G SFP+ module, 3.5W for the 40G QSFP+ module, 4W for the 100G QSFP28 module, 12W for the 400G QSFP-DD module, and 17W for the 800G QSFP-DD800 transceiver.

Take the Cisco Nexus 9232E 32-port QSFP-DD800 800G Switch as an example. If it is fully loaded with 32 800G QSFP-DD800 optical transceivers, the modules alone will consume 17W x 32 = 544W. In actual transmission, transceivers are used in pairs, so the optical modules at both ends of the links will together consume more than one kilowatt (2 x 544W = 1088W). That is a costly power budget for the optical modules alone.

Note: The power consumption value was taken from the Cisco transceiver module datasheet
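The arithmetic above can be reproduced with a short sketch. The per-module wattages are the figures quoted in this section; the watts-per-Gbps column is a derived illustration rather than a datasheet value, and the function and variable names are hypothetical.

```python
# Per-module figures quoted in this section: (data rate in Gbps, power in watts).
MODULE_POWER = {
    "1G SFP": (1, 1.0),
    "10G SFP+": (10, 1.5),
    "40G QSFP+": (40, 3.5),
    "100G QSFP28": (100, 4.0),
    "400G QSFP-DD": (400, 12.0),
    "800G QSFP-DD800": (800, 17.0),
}


def optics_power_watts(ports: int, watts_per_module: float,
                       both_link_ends: bool = False) -> float:
    """Power drawn by the optical modules alone, excluding the switch itself."""
    total = ports * watts_per_module
    return 2 * total if both_link_ends else total


def watts_per_gbps(module: str) -> float:
    """Derived metric: module power divided by its nominal data rate."""
    rate_gbps, watts = MODULE_POWER[module]
    return watts / rate_gbps


if __name__ == "__main__":
    _, watts_800g = MODULE_POWER["800G QSFP-DD800"]
    print("One fully loaded 32-port switch:",
          optics_power_watts(32, watts_800g), "W")
    print("Modules at both ends of 32 links:",
          optics_power_watts(32, watts_800g, both_link_ends=True), "W")
    for name in MODULE_POWER:
        print(f"{name}: {watts_per_gbps(name):.4f} W per Gbps")
```

On these figures, power per module rises with every generation while power per transmitted gigabit falls, which is why the total budget, not the per-bit efficiency, is the pressing issue at data center scale.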

Cost-Effectiveness

Future transceivers will be widely adopted once they become cost-effective. For example, 40GE remained widely used until 100GE became cost-effective. One approach is to double the data rate in each lane without increasing the baud rate. This has been done successfully with PAM4 (4-level Pulse Amplitude Modulation), combined with FEC (Forward Error Correction) and equalization to address PAM4's high sensitivity to noise. 100G PAM4 is now mature for 100GE and 400GE applications. The ramp-up to 800G puts pressure on the availability of 25.6 Tbps routers and switches with 100G PAM4 interfaces. The newly developed 200G PAM4 solution in 800G QSFP-DD and 800G OSFP optics makes 800G more affordable.
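The lane arithmetic behind this paragraph can be sketched as follows. The sketch uses nominal rates and ignores FEC and line-coding overhead, so real symbol rates are somewhat higher than the values it computes; all function names are illustrative.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """Bits carried by one symbol of an amplitude modulation with `levels` levels."""
    if levels < 2:
        raise ValueError("a modulation needs at least 2 levels")
    return math.log2(levels)


def nominal_baud_gbd(lane_gbps: float, levels: int) -> float:
    """Nominal symbol rate in GBd for a lane, ignoring FEC and coding overhead."""
    return lane_gbps / bits_per_symbol(levels)


def lanes_needed(port_gbps: int, lane_gbps: int) -> int:
    """Number of lanes required to carry a port rate (ceiling division)."""
    return -(-port_gbps // lane_gbps)


if __name__ == "__main__":
    # NRZ has 2 levels (1 bit per symbol); PAM4 has 4 levels (2 bits per symbol).
    print("100G lane, NRZ :", nominal_baud_gbd(100, 2), "GBd nominal")
    print("100G lane, PAM4:", nominal_baud_gbd(100, 4), "GBd nominal")
    print("800G port with 100G lanes:", lanes_needed(800, 100), "lanes")
    print("800G port with 200G lanes:", lanes_needed(800, 200), "lanes")
    print("800G ports on a 25.6 Tbps switch:", 25_600 // 800)
```

Halving the lane count per 800G module means fewer lasers, modulators, and drivers per module, which is one plausible reason 200G-per-lane optics are expected to reduce cost.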

Silicon Photonics may be another critical technology for lowering the cost of future optics, especially for dense transceivers with eight or more lanes and for coherent optical transceivers that require complex optical functionality. It integrates photonic components and transceiver functionality onto a silicon substrate. Further, future transceivers are expected to be co-packaged with the switch ASIC, which means independent optical transceivers may no longer need to be plugged into switch panels.

Smartness

Future networks dominated by AI-driven applications will propel the demand for intelligent optical networking devices and components. AI-based networks may comprise a vast number of devices, software, and complex technologies, which will become unmanageable unless network automation is achieved. A self-managed, automatic, AI-controlled system will rely on smart optics to report the operating condition of the optics themselves and of their connected fibers, which is paramount for anticipating failures before they happen and ensuring the smooth operation of AI computing systems.

As a cost-effective and easy solution for high-speed and long-haul transmission networks, the coherent transceiver is the dominant standard in Metro networks and will carry greater responsibilities and expectations in current and future AI-intensive applications. FEC, implemented in the DSP (the core component of coherent technology), makes a coherent link more tolerant of noise than direct detection. A future smart, programmable transceiver is expected to control its own FEC to adapt to changing networking applications.

Summary

Artificial intelligence (AI) is gaining momentum and is still being developed to address its limitations, such as generating nonsense answers. Future-proof, more powerful optical transceivers are critical to support ultra-fast data transmission as AI drives up data center demand. The shift from 400G to 800G addresses this growing demand: 2024 may become the year of 800G adoption in data centers. The reshaping of data center architecture on the training and prediction sides is expected to be another driver of transceiver demand. Besides the constant pursuit of higher speed, challenges include rising power consumption, cost-effectiveness, and the need for smart, automated systems to support AI-driven networks. Learning from previous successful technologies and making advances in PAM4 modulation, forward error correction (FEC), and Silicon Photonics may contribute to affordability and efficiency in meeting these demands.