Quality of Service in Networking: QoS Meaning Explained

In networking, "Quality of Service" (QoS) describes how a network manages its resources so that important traffic gets the performance it needs. This article explains what QoS means in networking, walks through the three basic QoS models, and highlights the applications where it plays a central role in keeping network operations efficient and reliable.

What is QoS in Networking?

QoS Definition

QoS, or Quality of Service, refers to a set of techniques and mechanisms implemented in computer networks to manage and improve the performance and reliability of data transmission. It involves prioritizing and controlling the flow of network traffic based on criteria such as bandwidth, latency, and packet loss. By ensuring that critical applications and services receive preferential treatment over less time-sensitive ones, QoS aims to optimize overall network efficiency and provide a more consistent and reliable user experience.

The Working Principle of Quality of Service in Computer Networks

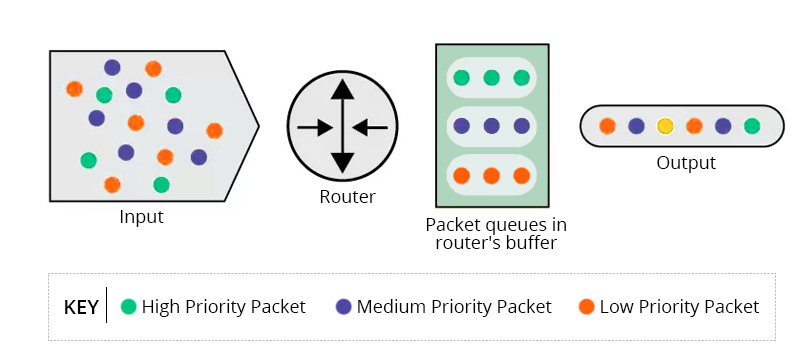

Quality of Service works by categorizing packets to distinguish different types of service, and then instructing routers to create different virtual queues for individual applications based on their priority. This process ensures that essential applications or high-priority Web sites receive reserved bandwidth. Quality of Service technologies facilitate the allocation of capacity and processing to specific network traffic flows, allowing network administrators to sequence the processing of packets and allocate appropriate bandwidth to each application or traffic flow. In essence, QoS improves network performance by prioritizing critical applications and optimizing resource allocation.
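The classify-then-queue idea described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular router implements it: the class names, the port-to-class mapping, and the `StrictPriorityScheduler` name are all hypothetical, and real devices usually classify on more than a destination port.

```python
from collections import deque

# Hypothetical traffic classes, highest priority first (illustrative only).
PRIORITY_ORDER = ["voice", "video", "best_effort"]

# Hypothetical classification rule: map a destination port to a class.
PORT_TO_CLASS = {5060: "voice", 554: "video"}


def classify(packet):
    """Return the traffic class for a packet dict with a 'dst_port' key."""
    return PORT_TO_CLASS.get(packet["dst_port"], "best_effort")


class StrictPriorityScheduler:
    """One FIFO queue per class; always serve the highest non-empty class."""

    def __init__(self):
        self.queues = {name: deque() for name in PRIORITY_ORDER}

    def enqueue(self, packet):
        self.queues[classify(packet)].append(packet)

    def dequeue(self):
        for name in PRIORITY_ORDER:
            if self.queues[name]:
                return self.queues[name].popleft()
        return None  # nothing waiting in any queue


sched = StrictPriorityScheduler()
sched.enqueue({"id": 1, "dst_port": 80})    # best effort
sched.enqueue({"id": 2, "dst_port": 5060})  # voice
sched.enqueue({"id": 3, "dst_port": 554})   # video
order = [sched.dequeue()["id"] for _ in range(3)]
print(order)  # [2, 3, 1]
```

Strict priority is the simplest policy to show, but it can starve low-priority queues under sustained load, which is why weighted schemes are often used alongside it.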

Three Fundamental Models of QoS in Computer Networks

Three fundamental models shape how QoS is delivered and managed in computer networks. Each takes a different approach and suits different requirements and scenarios: the Best-Effort Model, the Integrated Service Model (IntServ), and the Differentiated Service Model (DiffServ).

Best-Effort Model

This model is a basic approach to quality of service (QoS) in computer networks where no specific service levels are guaranteed. In this model, data packets are treated equally, and the network aims to deliver them as efficiently as possible without providing any prioritization. This model is appropriate for applications that can tolerate variable delays and packet loss, such as Web browsing and e-mail services.
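As a rough sketch of best-effort behavior, the example below models a single shared FIFO queue that drops new arrivals when it is full (tail drop). The `BestEffortQueue` class and its capacity are assumptions made for illustration; the point is only that every packet is handled the same way, whatever application sent it.

```python
from collections import deque


class BestEffortQueue:
    """Single FIFO shared by all traffic; drops arrivals when full (tail drop)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.dropped = 0

    def enqueue(self, packet):
        if len(self.queue) >= self.capacity:
            self.dropped += 1  # no class gets special treatment
            return False
        self.queue.append(packet)
        return True

    def dequeue(self):
        return self.queue.popleft() if self.queue else None


q = BestEffortQueue(capacity=2)
for name in ["voip", "web", "email"]:
    q.enqueue(name)
first = q.dequeue()
print(first, q.dropped)  # voip 1
```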

Integrated Service Model (IntServ)

The Integrated Service Model (IntServ) for Quality of Service (QoS) is a meticulous approach to ensuring end-to-end quality guarantees for individual network flows. IntServ, which is particularly important for applications with stringent quality requirements such as real-time communications and video conferencing, uses the Resource Reservation Protocol (RSVP) to reserve resources along the entire network path.

Using RSVP signaling, IntServ-enabled devices communicate specific QoS parameters such as bandwidth, latency, and jitter to network routers. These routers, in turn, allocate dedicated resources and establish a predictable path for the identified flow. This meticulous resource reservation process is key to meeting the stringent requirements of applications that prioritize a consistent, high-quality user experience.

While IntServ excels at providing explicit QoS for critical flows, it scales poorly in large networks with many flows, because every router along the path must keep reservation state for each individual flow. As a result, it is better suited for environments with manageable traffic demands, where precise QoS guarantees are paramount.
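The reservation idea can be illustrated with a simplified admission-control sketch. It is not an RSVP implementation (real RSVP exchanges PATH and RESV messages hop by hop and refreshes state over time); the `Link` class, `reserve_path` function, and capacities are hypothetical and only show the all-or-nothing nature of an end-to-end reservation.

```python
class Link:
    """A link with a fixed capacity and a running total of reserved bandwidth."""

    def __init__(self, name, capacity_kbps):
        self.name = name
        self.capacity_kbps = capacity_kbps
        self.reserved_kbps = 0

    def available(self):
        return self.capacity_kbps - self.reserved_kbps


def reserve_path(path, flow_kbps):
    """Reserve flow_kbps on every link of the path, or on none of them."""
    if any(link.available() < flow_kbps for link in path):
        return False  # admission control rejects the flow
    for link in path:
        link.reserved_kbps += flow_kbps
    return True


path = [Link("A-B", 1000), Link("B-C", 300), Link("C-D", 1000)]
first = reserve_path(path, 200)   # every hop has room
second = reserve_path(path, 200)  # B-C has only 100 kbps left
print(first, second, path[0].reserved_kbps)  # True False 200
```

The per-link `reserved_kbps` counter stands in for the per-flow state that makes IntServ costly to run at scale.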

Differentiated Service Model (DiffServ)

The Differentiated Service Model (DiffServ) is a QoS approach that sorts traffic into a small number of service classes and prioritizes it accordingly. Unlike IntServ, which reserves resources for each flow, DiffServ marks packets at the network edge with a Differentiated Services Code Point (DSCP), a 6-bit value carried in the IP header. As packets traverse routers, each router reads the marking and applies the matching per-hop behavior, such as a particular queue or drop preference. Because no end-to-end reservation or per-flow state is needed, DiffServ is well-suited for large-scale networks with dynamic traffic patterns.

In essence, DiffServ offers a practical and scalable approach to QoS, ensuring efficient traffic management by prioritizing packets according to assigned Differentiated Services Code Points.
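To make DSCP marking concrete, here is a small sketch of how an application might request a marking on its own traffic using Python's standard `socket` module. It assumes a platform that exposes `socket.IP_TOS` (typical on Linux; behavior differs on other systems), and the helper names `dscp_to_tos` and `open_marked_udp_socket` are made up for this example. Whether routers honor, ignore, or rewrite the marking depends on network policy.

```python
import socket

# Standard DSCP values: Expedited Forwarding (EF) = 46, default = 0.
DSCP_EF = 46
DSCP_DEFAULT = 0


def dscp_to_tos(dscp):
    """DSCP occupies the upper 6 bits of the 8-bit IPv4 TOS/DS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def open_marked_udp_socket(dscp):
    """Create a UDP socket whose outgoing IPv4 packets request the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Assumption: the OS exposes IP_TOS and allows the application to set it.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


print(dscp_to_tos(DSCP_EF))  # 184 (0xB8)
```

In many deployments marking is done by edge switches or routers rather than by applications, so treat host-side marking as one option among several.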

Applications of QoS

In this section, we will explore specific applications where QoS is crucial.

Network Protocols and Management Protocols

Quality of Service (QoS) helps protect network protocols such as OSPF (Open Shortest Path First) and management protocols like Telnet from congestion. With QoS, OSPF packets receive prioritized handling, which minimizes latency and packet loss so that routing information is exchanged reliably even when links are busy. For a detailed look at OSPF, read this article: OSPF vs. BGP: Choosing the Right Routing Protocol. Likewise, QoS keeps Telnet sessions responsive by prioritizing command and control traffic, so administrators can still reach devices during congestion. Note that QoS affects responsiveness only; it does not encrypt or otherwise secure Telnet traffic.

Real-time Applications Like Video Conferencing and VoIP

The real-time nature of applications like video conferencing and Voice over Internet Protocol (VoIP) imposes stringent requirements on network performance. QoS becomes indispensable in this context, as it allows real-time traffic flows to be prioritized for low latency, minimal jitter, and low packet loss. By prioritizing these critical communication channels, QoS mitigates disruptions and helps deliver a smooth, uninterrupted user experience in video conferencing and VoIP services.

High Data Volume Services

High-volume services such as FTP transfers, database backups, and file dumps benefit from Quality of Service (QoS) in two ways. First, QoS can allocate them enough bandwidth to sustain throughput and limit packet loss, so large transfers and backups complete on time. Second, QoS can regulate the rate of these bulk flows so they do not congest the link and crowd out latency-sensitive traffic, which keeps bandwidth utilization high without hurting other applications.
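One common way to regulate the rate of a bulk flow is a token bucket. The sketch below is a minimal, hypothetical version: the `TokenBucket` class, its parameters, and the explicit `now` argument (used instead of a real clock so the behavior is easy to follow) are all choices made for this example rather than any device's actual implementation.

```python
class TokenBucket:
    """Token bucket: tokens accrue at `rate` per second, capped at `burst`."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s
        self.burst = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0

    def allow(self, size_bytes, now):
        """Return True and spend tokens if the packet conforms at time `now`."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # non-conforming: drop, delay, or re-mark the packet


bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
results = [
    bucket.allow(1500, now=0.0),  # full bucket covers the first packet
    bucket.allow(1500, now=0.5),  # only 500 tokens refilled so far
    bucket.allow(1500, now=1.5),  # 500 + 1000 tokens = 1500, enough again
]
print(results)  # [True, False, True]
```

What happens to a non-conforming packet is a policy decision: a policer drops or re-marks it, while a shaper queues it until enough tokens are available.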

Streaming Media Services

Streaming media services, encompassing audio and video streaming platforms, heavily rely on QoS to deliver a seamless streaming experience. QoS prioritizes streaming traffic, minimizes buffering delays, and ensures consistent video and audio quality. This is especially critical in today's digital landscape, where users expect high-quality multimedia content without interruptions.

Benefits of Quality of Service (QoS)

Improved Resource Management

One of the primary benefits of QoS is the improved management of network resources. By implementing Quality of Service mechanisms, such as traffic prioritization and bandwidth allocation, organizations can ensure that critical applications receive the necessary resources, preventing congestion and optimizing overall network efficiency. This enhanced resource management leads to a more stable and responsive network infrastructure, catering to the diverse needs of different applications and services.
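As a simple illustration of bandwidth allocation, the sketch below splits a link's capacity among traffic classes in proportion to configured weights. The function name, class names, and weights are hypothetical; real schedulers also redistribute bandwidth that a class leaves unused, which this example does not attempt.

```python
def allocate_bandwidth(capacity_mbps, weights):
    """Split link capacity among classes in proportion to their weights."""
    total = sum(weights.values())
    return {name: capacity_mbps * w / total for name, w in weights.items()}


shares = allocate_bandwidth(100, {"voice": 2, "video": 3, "bulk": 5})
print(shares)  # {'voice': 20.0, 'video': 30.0, 'bulk': 50.0}
```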

Enhanced User Experience

QoS plays a pivotal role in enhancing the user experience across various applications and services. By prioritizing critical traffic, such as real-time communication and streaming media, QoS ensures that users experience minimal disruptions, reduced latency, and consistent high-quality performance. This, in turn, contributes to increased user satisfaction and fosters a positive perception of the provided services.

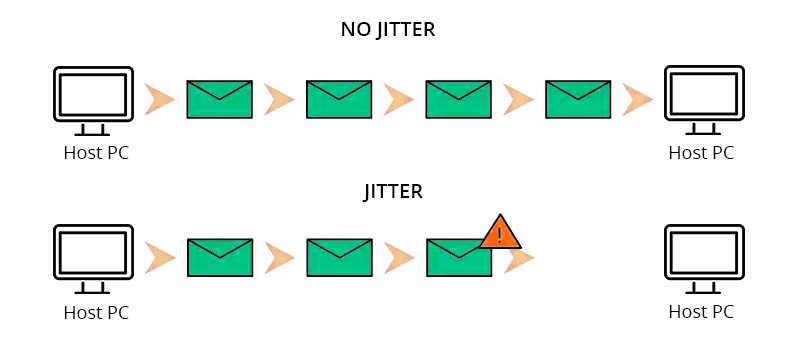

Reduced Latency and Jitter

The reduction of latency and jitter is a significant benefit of QoS. Latency is the time a packet takes to cross the network, and jitter is the variation in that time from one packet to the next. Where real-time communication and multimedia services are crucial, QoS mechanisms prioritize the timely delivery of their packets, which shortens queuing delays and keeps delay variation low. This improves the performance of applications like video conferencing and VoIP and also benefits overall network responsiveness.
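Jitter can be quantified from packet timestamps. The sketch below follows the running interarrival-jitter estimate defined for RTP in RFC 3550, where each new sample moves the estimate by one sixteenth of the difference. The function name and the sample timestamps are invented for illustration, and times are assumed to be in milliseconds.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate in the style of RFC 3550 (RTP)."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


send = [0, 20, 40, 60]      # packets sent every 20 ms
steady = [50, 70, 90, 110]  # constant 50 ms delay
uneven = [50, 78, 90, 126]  # delay varies from packet to packet

print(interarrival_jitter(send, steady))      # 0.0
print(interarrival_jitter(send, uneven) > 0)  # True
```

A constant delay, however large, produces zero jitter; only variation in delay raises the estimate, which is why latency and jitter are tracked as separate metrics.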

Unrestricted Application Priority

QoS grants organizations the flexibility to assign priorities to different applications based on their specific requirements. Critical applications, such as voice communication or mission-critical data transfer, can be given higher priority, ensuring that they receive the necessary network resources without interference from less time-sensitive traffic. This unrestricted application priority empowers organizations to tailor their network management strategies to align with their specific business needs and priorities.

Conclusion

In conclusion, Quality of Service (QoS) is a cornerstone of network optimization, delivering improved resource management, an enhanced user experience, and reduced latency and jitter. Its applications, from protecting network protocols to supporting high-volume services and streaming media, showcase its versatility. By letting organizations set application priorities freely, QoS allows them to tailor their network strategies and build a responsive and efficient network infrastructure.