Bandwidth vs Throughput vs Latency: What’s the Difference in Computer Networking?

Network bandwidth, throughput, and latency are three key measures of network performance, and the choice of network switches affects all three. This article looks at the definitions of bandwidth, throughput, and latency, how they relate to one another, and the role network switches play in optimizing them.

What is Network Bandwidth?

How to Define Bandwidth in Networking?

Network bandwidth is the maximum amount of data that a network can transmit in a given period. It is usually measured in bits per second (e.g., bps, Kbps, Mbps, Gbps). It reflects the transmission capacity of the network: with higher bandwidth, the network can move more data in the same amount of time.
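To make the units concrete, here is a minimal sketch of how bandwidth translates into an ideal transfer time. The function name and the file size are illustrative assumptions, and the result ignores protocol overhead, latency, and congestion, so real transfers take longer.

```python
def transfer_time_seconds(size_bytes: float, bandwidth_bps: float) -> float:
    """Ideal time to move size_bytes over a link of bandwidth_bps (bits per second)."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    # Bandwidth is quoted in bits per second, file sizes in bytes: 1 byte = 8 bits.
    return size_bytes * 8 / bandwidth_bps


# Hypothetical example: a 1 GB file (10**9 bytes) on two different links.
file_size_bytes = 10**9
for label, bps in [("100 Mbps", 100e6), ("1 Gbps", 1e9)]:
    seconds = transfer_time_seconds(file_size_bytes, bps)
    print(f"{label}: {seconds:.0f} s")
```

Under these assumptions, a tenfold increase in bandwidth cuts the ideal transfer time by a factor of ten.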

As an analogy, network bandwidth is like the diameter of a water pipe. Thicker pipes can carry more water at once. Similarly, the larger the network bandwidth, the more data can be transmitted simultaneously. A network with a large bandwidth is like a thick water pipe, allowing large amounts of data to pass through quickly and improving overall network performance.

Why is Bandwidth Important?

Network bandwidth determines how much data can be transmitted per unit of time, which directly affects data transfer speed and user experience. Sufficient bandwidth keeps connections stable and fast when multiple devices use the network simultaneously, supporting high-demand applications such as high-definition video streaming, cloud services, and video conferencing. Bandwidth does not shorten the time a signal needs to travel, but having enough of it avoids the queuing delay and lag that appear when a link is saturated, which improves the quality of real-time communication and online collaboration.

What is Network Latency?

The Meaning of Latency in Networking

Network latency is the time it takes for data to travel from one network node to another, commonly measured in milliseconds. It reflects the responsiveness of the network. The lower the latency, the sooner data arrives and the better the user experience.

As an analogy, network latency is like the delay in delivering a spoken message. If you are talking to a friend in a different room, the time that elapses between when you say a sentence and when your friend hears it is the latency. High network latency leads to problems such as slow-loading web pages and lag in video calls and online games. Low latency is therefore crucial for real-time, smooth-running web applications.

What Factors Cause Network Latency?

Physical Distance: The greater the physical distance between network nodes, the greater the latency. For example, transferring data from one country to another takes longer than transferring it within the same city.

Network Devices: Network devices such as firewalls, switches, and routers introduce processing time during data transmission. The higher the performance of the device, the faster the data is processed and the lower the latency.

Network Congestion: When the amount of data traffic traveling through a network exceeds its carrying capacity, congestion results, and packets may have to be queued for transmission, thus increasing latency.

Transmission Medium: The data transmission medium (e.g., fiber optics, cable, wireless signals, etc.) affects latency. Fiber optic transmission is fast and has low latency, while wireless signals may have higher latency due to interference and distance.
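The factors above can be sketched as a simple sum of delay components. This is an illustrative model, not a measurement tool: the function names, the distances, and the packet size are assumptions, and the signal speed in fiber is taken as roughly two-thirds of the speed of light in vacuum.

```python
# Assumption: signals in optical fiber travel at about 2e8 metres per second,
# roughly two-thirds of the speed of light in vacuum.
SPEED_IN_FIBER_M_PER_S = 2.0e8


def propagation_delay_s(distance_m: float,
                        speed_m_per_s: float = SPEED_IN_FIBER_M_PER_S) -> float:
    """Time for a signal to cover the physical distance."""
    return distance_m / speed_m_per_s


def serialization_delay_s(packet_bytes: int, bandwidth_bps: float) -> float:
    """Time to place all bits of one packet onto the link."""
    return packet_bytes * 8 / bandwidth_bps


def one_way_latency_s(distance_m: float, packet_bytes: int, bandwidth_bps: float,
                      processing_s: float = 0.0, queuing_s: float = 0.0) -> float:
    """Sum of the delay components: distance, link rate, devices, congestion."""
    return (propagation_delay_s(distance_m)
            + serialization_delay_s(packet_bytes, bandwidth_bps)
            + processing_s
            + queuing_s)


# Hypothetical paths: 50 km within a city versus 6,000 km between countries,
# both carrying a 1500-byte packet over a 1 Gbps link with idle devices.
same_city = one_way_latency_s(50_000, 1500, 1e9)
intercontinental = one_way_latency_s(6_000_000, 1500, 1e9)
print(f"same city:        {same_city * 1000:.3f} ms")
print(f"intercontinental: {intercontinental * 1000:.3f} ms")
```

In this sketch the long path is dominated by propagation delay, which no switch upgrade can remove, while processing and queuing delay are the parts that device choice and congestion control can influence.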

What is Throughput in Networking?

Throughput: Networking Definition

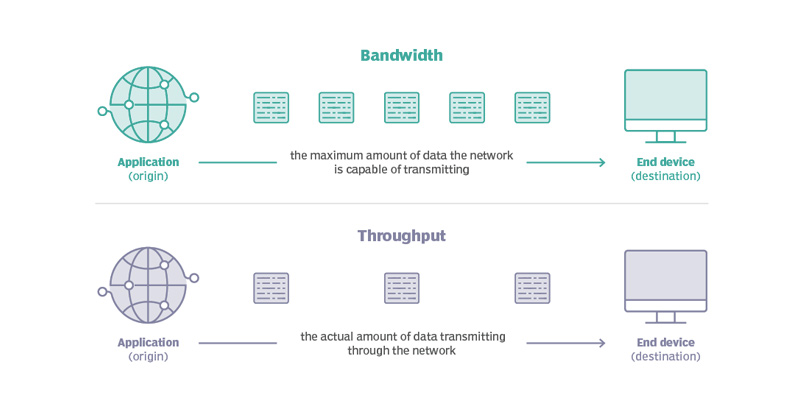

Network throughput is the amount of data actually transmitted and received in a given period. As mentioned above, network bandwidth is like the diameter of a water pipe, determining the maximum amount of water that can flow through the pipe under ideal conditions. Network throughput, then, is the amount of water that actually flows through the pipe.
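As a rough illustration of the difference between the pipe's diameter and the water that actually flows, the sketch below computes throughput from a measured transfer and compares it with the link's bandwidth. The function names and the sample numbers are hypothetical.

```python
def throughput_bps(bytes_transferred: float, elapsed_s: float) -> float:
    """Observed throughput in bits per second for a completed transfer."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return bytes_transferred * 8 / elapsed_s


def utilization(measured_bps: float, bandwidth_bps: float) -> float:
    """Fraction of the nominal bandwidth that was actually used."""
    return measured_bps / bandwidth_bps


# Hypothetical measurement: 450 MB moved in 60 seconds over a 100 Mbps link.
link_bandwidth_bps = 100e6
measured_bps = throughput_bps(450e6, 60.0)
print(f"throughput:  {measured_bps / 1e6:.1f} Mbps")
print(f"utilization: {utilization(measured_bps, link_bandwidth_bps):.0%}")
```

Here the link could carry 100 Mbps, but the transfer achieved 60 Mbps, or 60% of the available bandwidth.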

What Factors Affect Network Throughput?

Bandwidth: Bandwidth sets the upper limit on throughput. Higher bandwidth allows more data to be sent in less time, increasing throughput.

Network Latency: High latency causes packets to spend more time in the network, which reduces throughput. The impact of latency on throughput is especially noticeable when frequent acknowledgments and responses are required.

Network Congestion: Network congestion causes packets to queue during transmission, increasing latency and packet loss rates, which reduces throughput.

Packet Loss: A high packet loss rate causes more packets to be retransmitted, which uses up bandwidth resources and reduces throughput.

Network Device Performance: The processing power of routers, switches, and other network devices can also affect throughput. Lower-performing devices can become bottlenecks, limiting the speed at which data can be transmitted.
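To show how latency and packet loss combine to hold throughput down, the sketch below uses the approximation commonly attributed to Mathis et al. for a single long-lived TCP connection: throughput is roughly (MSS / RTT) × (C / √p), where p is the loss rate. This formula is not from the article, it applies only to TCP-like traffic under steady random loss, and the constant C ≈ 1.22 and all sample values are assumptions for illustration.

```python
import math

# Assumption: constant from the simplified Mathis et al. model, sqrt(3/2).
MATHIS_CONSTANT = math.sqrt(3 / 2)


def tcp_throughput_estimate_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Rough upper estimate of single-flow TCP throughput under random loss."""
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be between 0 and 1 (exclusive)")
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return (mss_bytes * 8 / rtt_s) * (MATHIS_CONSTANT / math.sqrt(loss_rate))


# Hypothetical path: 1460-byte segments, 50 ms round-trip time.
for loss in (0.0001, 0.001, 0.01):
    estimate = tcp_throughput_estimate_bps(1460, 0.050, loss)
    print(f"loss {loss:.2%}: about {estimate / 1e6:.1f} Mbps")
```

In this model, a hundredfold increase in loss cuts the estimate by a factor of ten, and doubling the round-trip time halves it, regardless of how much bandwidth the link offers.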

The Importance of High Throughput

High throughput means that more data can be transferred in the same amount of time, which improves data transfer efficiency and lets data-heavy tasks finish sooner. Good throughput also enhances the user experience, enabling faster web page loading, smoother video playback, and fewer stalls in online games. For enterprises, network throughput directly affects the continuity and stability of business operations. High throughput keeps internal systems and applications running steadily, reducing downtime and business interruptions caused by network performance issues.

Latency vs Bandwidth vs Throughput: Understanding the Differences

Latency vs Throughput

Latency measures how long data takes to arrive, while throughput measures how much data is delivered in a given time.

High latency reduces throughput. When a sender must wait for acknowledgments before sending more data, every extra millisecond of delay lowers the overall data transfer rate. For example, in a high-latency network, even with sufficient bandwidth, the actual throughput is limited by the time packets and their acknowledgments spend in transit. Therefore, optimizing throughput often requires reducing latency so that data can be transmitted quickly and efficiently.
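A simple way to see this effect is a window-based sender, such as TCP, which can have at most one window of unacknowledged data in flight per round trip. The sketch below assumes a fixed 65,535-byte window (the classic TCP maximum without window scaling) and a hypothetical 1 Gbps link; the function names are illustrative.

```python
def window_limited_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """At most one window of data can be delivered per round-trip time."""
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return window_bytes * 8 / rtt_s


def achievable_throughput_bps(link_bps: float, window_bytes: int, rtt_s: float) -> float:
    """Throughput is capped by whichever is lower: the link or the window limit."""
    return min(link_bps, window_limited_throughput_bps(window_bytes, rtt_s))


# Hypothetical setup: 1 Gbps link, fixed 65,535-byte window, varying round-trip time.
link_bps = 1e9
window_bytes = 65_535
for rtt_ms in (1, 10, 100):
    bps = achievable_throughput_bps(link_bps, window_bytes, rtt_ms / 1000)
    print(f"RTT {rtt_ms:>3} ms: about {bps / 1e6:.1f} Mbps")
```

Under these assumptions the same 1 Gbps link delivers far less as the round-trip time grows, which is why latency matters even when bandwidth is plentiful.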

Bandwidth vs Throughput

Bandwidth provides the potential for transferring data, while throughput reflects the network's performance under actual conditions.

Bandwidth sets the maximum capacity of the network to transmit data, but actual throughput is also affected by network conditions such as latency and congestion. Ideally, throughput should be close to bandwidth, but packet loss and network congestion can cause actual throughput to fall below the maximum bandwidth. Therefore, improving throughput relies on increasing bandwidth and optimizing network conditions to reduce obstacles in data transmission.

Bandwidth vs Latency

Bandwidth is concerned with how much data can be transferred per unit of time, while latency is concerned with how long each piece of data takes to arrive. High bandwidth does not necessarily mean low latency, and vice versa. For example, a network with high bandwidth may still have high latency because of long distances or the processing and queuing time of packets during transmission. Conversely, a low-latency network responds quickly, but the overall amount of data transferred remains limited if bandwidth is insufficient. Therefore, optimizing network performance requires balancing bandwidth and latency to ensure both sufficient transmission capacity and fast delivery.
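One common way to relate the two quantities is the bandwidth-delay product: the amount of data that must be in flight to keep a link full. The sketch below is illustrative, with hypothetical link speeds and round-trip times.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the link fully utilized."""
    return bandwidth_bps * rtt_s / 8


# Hypothetical links: same bandwidth, different round-trip times.
for label, bps, rtt_s in [
    ("1 Gbps, 1 ms RTT (local)", 1e9, 0.001),
    ("1 Gbps, 50 ms RTT (long distance)", 1e9, 0.050),
]:
    bdp = bandwidth_delay_product_bytes(bps, rtt_s)
    print(f"{label}: {bdp / 1e6:.3f} MB in flight needed")
```

The higher the latency on a high-bandwidth path, the more data senders and network devices must buffer and keep in flight to make use of that bandwidth.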

How Can You Increase Your Network Speed By Improving Latency, Throughput and Bandwidth?

Choosing a Switch with Higher Bandwidth Capacity

Choosing a switch with a higher bandwidth capacity can significantly improve network performance. Higher bandwidth capacity allows more data to be transmitted per unit of time, thereby increasing overall network throughput. Look for switches with high-speed ports to handle large data loads.

Consider Low-Latency Specifications

Low-latency switches reduce the time packets spend passing through the network, improving the performance of real-time applications. When choosing a switch, check its latency specifications and prefer a model with lower forwarding latency.

Choose a Managed Switch

Managed switches provide greater control and more configuration options to optimize network performance. With a managed switch, you can perform traffic management, VLAN settings, and QoS (Quality of Service) configuration to control traffic, reduce congestion, and improve network efficiency.

Evaluate Port Density and Speed

When selecting a switch, evaluate its port density and speed to meet current and future needs. Switches with higher port density allow more devices to connect, increasing network scalability. High-speed ports, such as Gigabit and 10 Gigabit ports, provide faster data transfer rates and improve overall network performance.

Look for Network Redundancy Features

Network redundancy features can improve the reliability and stability of your network. Choosing a switch with redundant power supplies and link aggregation can keep your network up and running in the event of a device failure or connection interruption, reducing downtime and ensuring network continuity.

Conclusion

Having explored bandwidth, throughput, and latency in detail, we can summarize these key network performance metrics and how they relate. Bandwidth determines the maximum data transfer capacity, throughput reflects the amount of data actually transferred, and latency measures how long data takes to arrive. Optimizing network performance requires not only choosing a switch with high bandwidth and low latency, but also considering a managed switch's traffic control capabilities as well as port density, speed, and redundancy features. Optimizing these factors together can significantly improve network performance.