Everything You Should Know About InfiniBand vs. Ethernet

InfiniBand and Ethernet each have their own strengths, so it is hard to call one simply better than the other. They evolved for different application scenarios, and both remain indispensable networking technologies.

InfiniBand and Ethernet: What Are They?

InfiniBand Networks

InfiniBand and Ethernet are very different in design. InfiniBand shines in supercomputer clusters thanks to its high reliability, low latency, and high bandwidth, which has made it a preferred interconnect for servers in that space, especially GPU servers. Each InfiniBand lane carries single data rate (SDR) signals at a base rate of 2.5 Gbit/s. Double data rate (DDR) and quad data rate (QDR) signaling raise the per-lane rate to 5 Gbit/s and 10 Gbit/s, so a QDR link reaches 40 Gbit/s over a 4X cable and 120 Gbit/s over a 12X cable.
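
The lane arithmetic above can be sketched in a few lines of Python. This is only an illustration: the names `LANE_RATE_GBPS` and `link_rate_gbps` are made up for this example, and the figures are the raw per-lane signaling rates quoted in the text, with encoding overhead ignored.

```python
# Per-lane signaling rates in Gbit/s, as quoted in the text.
LANE_RATE_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}


def link_rate_gbps(generation: str, lanes: int) -> float:
    """Aggregate rate of a link: per-lane rate multiplied by lane count."""
    return LANE_RATE_GBPS[generation] * lanes


# Print the rate for every generation and the 1X, 4X and 12X widths.
for generation in LANE_RATE_GBPS:
    for lanes in (1, 4, 12):
        rate = link_rate_gbps(generation, lanes)
        print(f"{generation} {lanes}X: {rate:g} Gbit/s")
```

The QDR rows reproduce the 40 Gbit/s (4X) and 120 Gbit/s (12X) figures mentioned above.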

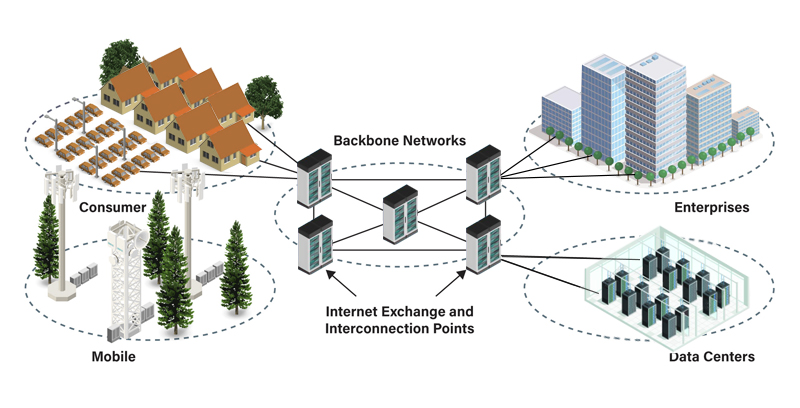

Ethernet

Since its release on 30 September 1980, Ethernet has become the most commonly used communication protocol in LANs. Unlike InfiniBand, Ethernet was designed to move information between many different systems, so it favors a distributed architecture and broad compatibility. Traditional Ethernet networks are usually built on TCP/IP, and the ecosystem is now evolving toward RoCE (RDMA over Converged Ethernet). In general, Ethernet connects computers and other devices on a LAN, for example a computer to a printer or a scanner. Wired links can run over copper or fiber optics, and wireless technology can extend the LAN to devices without a cable. The main types of Ethernet include Fast Ethernet, Gigabit Ethernet, 10 Gigabit Ethernet, and Switched Ethernet.

Differences Between InfiniBand and Ethernet

InfiniBand was originally designed to remove the data transmission bottleneck in high-performance computing clusters. As a result, InfiniBand and Ethernet differ clearly in bandwidth, latency, network reliability, networking approach, and application scenarios.

Bandwidth

Since its inception, InfiniBand has stayed ahead of Ethernet in bandwidth. The main reason is that InfiniBand mostly interconnects servers in high-performance computing, whereas Ethernet focuses more on end-device connectivity, where bandwidth requirements are relatively low. As a result, Ethernet standards have mainly addressed interoperability, while InfiniBand standards also address how to reduce the load on the CPU during high-speed transfers, so that bandwidth is used efficiently with as few CPU resources as possible.

When network traffic exceeds 10 Gbps, having the CPU encapsulate and de-encapsulate every packet wastes resources: expensive CPU cycles are spent on simple network transmission tasks, which is not cost-effective from a resource allocation perspective.
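
A rough back-of-the-envelope calculation shows why per-packet CPU processing becomes expensive at these rates. The sketch below is illustrative only: the 3 GHz core and the packet sizes are assumptions chosen for the example, framing overhead is ignored, and the function names are hypothetical.

```python
def packets_per_second(link_gbps: float, packet_bytes: int) -> float:
    """Packets per second needed to fill a link with fixed-size packets."""
    return link_gbps * 1e9 / (packet_bytes * 8)


def cycles_per_packet(cpu_ghz: float, link_gbps: float, packet_bytes: int) -> float:
    """CPU cycles available per packet if a single core touches every packet."""
    return cpu_ghz * 1e9 / packets_per_second(link_gbps, packet_bytes)


# Assumed values for illustration: one 3 GHz core, a 10 Gbps link.
for size in (1500, 64):
    pps = packets_per_second(10, size)
    budget = cycles_per_packet(3.0, 10, size)
    print(f"{size}-byte packets: {pps:,.0f} packets/s, {budget:,.1f} cycles each")
```

Under these assumptions the per-packet cycle budget shrinks quickly as packets get smaller or links get faster, which is the cost that offloading is meant to avoid.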

InfiniBand's first-generation SDR rate was already 10 Gbps, so it had to deal with this problem from the start. It borrowed the idea of DMA and introduced RDMA, which moves data without involving the CPU. This both increases the bandwidth of data transmission and relieves pressure on the CPU. With RDMA, a significant increase in bandwidth does not force the CPU to spend more resources on network processing, so the network does not slow down the overall high-performance computing workload. This is why InfiniBand has been able to evolve rapidly from 10 Gbps SDR to today's 200 Gbps HDR, 400 Gbps NDR, and even 800 Gbps XDR.

Network Latency

InfiniBand and Ethernet also differ considerably in latency. Ethernet switches typically use store-and-forward with MAC address table lookup, which results in relatively long processing times. In contrast, InfiniBand switches only need a 16-bit LID to look up the forwarding path and use cut-through forwarding, which reduces forwarding latency to less than 100 ns.
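
The difference between the two forwarding modes can be approximated with a simple serialization-delay model. This is a minimal sketch, not a model of any real switch: it only counts the time a switch waits for bits to arrive before it can start forwarding, and ignores lookup, queuing, and propagation time. `HEADER_BYTES` and the table entry are assumed values for illustration.

```python
HEADER_BYTES = 8  # assumption: bytes a cut-through switch reads before forwarding


def serialization_delay_ns(num_bytes: int, link_gbps: float) -> float:
    """Time to receive num_bytes on a link, in ns (bits divided by Gbit/s)."""
    return num_bytes * 8 / link_gbps


def store_and_forward_wait_ns(frame_bytes: int, link_gbps: float) -> float:
    """Store-and-forward waits for the whole frame before forwarding."""
    return serialization_delay_ns(frame_bytes, link_gbps)


def cut_through_wait_ns(link_gbps: float, header_bytes: int = HEADER_BYTES) -> float:
    """Cut-through waits only for the header that carries the destination."""
    return serialization_delay_ns(header_bytes, link_gbps)


# A 16-bit LID can index a flat table directly; no hashing or learning step.
forwarding_table = [None] * (1 << 16)
forwarding_table[0x0012] = 7  # hypothetical entry: LID 0x0012 leaves via port 7


def output_port(dest_lid: int):
    """Look up the output port for a destination LID by direct indexing."""
    return forwarding_table[dest_lid]


print(store_and_forward_wait_ns(1500, 10), "ns for a 1500-byte frame at 10 Gbps")
print(cut_through_wait_ns(10), "ns for the header alone at 10 Gbps")
print("LID 0x0012 -> port", output_port(0x0012))
```

In this model the store-and-forward wait grows with frame size, while the cut-through wait depends only on the header length.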

Network Reliability

In high-performance computing, packet loss and retransmission can seriously degrade overall performance, so a highly reliable network protocol is required. InfiniBand implements its class-of-service (CoS) mechanism across Layers 1 through 4, giving it a consistent, layered design with static lossless packet forwarding. Traditional Ethernet, by contrast, lacks a scheduling-based flow control mechanism for handling network congestion efficiently. As a result, Ethernet switches need more buffer memory under heavy traffic, which raises cost and energy consumption.

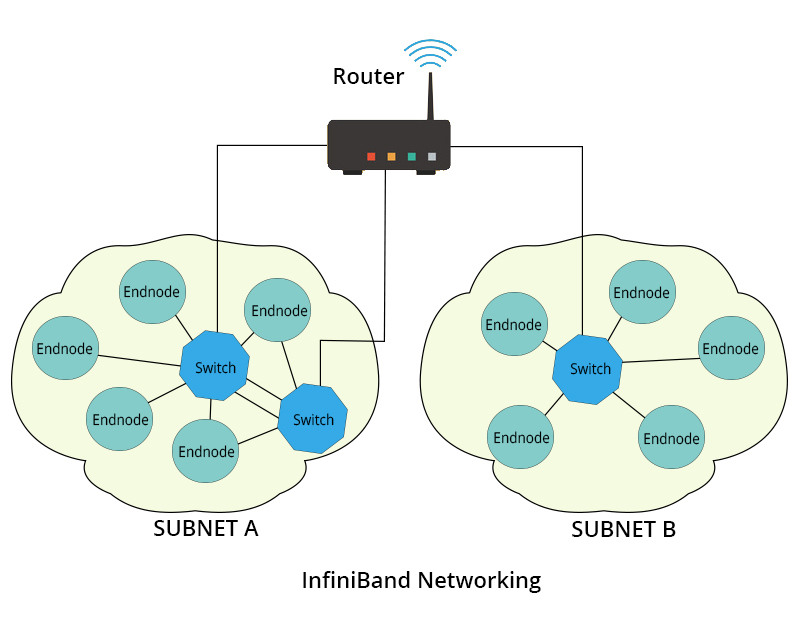
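
One way to achieve lossless forwarding at the link level is credit-based flow control, which InfiniBand uses: a sender transmits only when the receiver has advertised free buffer space, so a full buffer causes the sender to wait rather than the receiver to drop packets. The toy model below illustrates the idea. All class and function names are hypothetical, and the buffer size and drain rate are arbitrary values chosen for the example.

```python
from collections import deque


class Receiver:
    """Receiver with a fixed number of buffer slots."""

    def __init__(self, buffer_slots: int):
        self.buffer = deque()
        self.buffer_slots = buffer_slots

    def accept(self, packet) -> None:
        # A correct sender never overruns the buffer, so this never fires.
        assert len(self.buffer) < self.buffer_slots, "credit limit violated"
        self.buffer.append(packet)

    def drain(self, count: int) -> int:
        """Process up to count buffered packets; return the slots freed."""
        freed = min(count, len(self.buffer))
        for _ in range(freed):
            self.buffer.popleft()
        return freed


class Sender:
    """Sender that spends one credit per packet and waits when it has none."""

    def __init__(self, initial_credits: int):
        self.credits = initial_credits
        self.sent = 0
        self.stalled = 0

    def try_send(self, packet, receiver: Receiver) -> bool:
        if self.credits == 0:
            self.stalled += 1  # wait for credits instead of dropping
            return False
        self.credits -= 1
        receiver.accept(packet)
        self.sent += 1
        return True


def run(num_packets: int = 10, slots: int = 4):
    """Send num_packets through a small buffer; return (sent, delivered, stalls)."""
    receiver = Receiver(slots)
    sender = Sender(initial_credits=slots)
    delivered = 0
    next_packet = 0
    while next_packet < num_packets:
        if sender.try_send(next_packet, receiver):
            next_packet += 1
        else:
            freed = receiver.drain(2)  # receiver frees space...
            delivered += freed
            sender.credits += freed    # ...and returns that many credits
    delivered += receiver.drain(slots)  # flush what is still buffered
    return sender.sent, delivered, sender.stalled


print(run())
```

In this sketch every packet that is sent is eventually delivered; congestion shows up as sender stalls, not as loss.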

Networking Approach

From a networking perspective, InfiniBand is relatively simple to manage. Its design incorporates the SDN concept: the subnet manager of each subnet configures the IDs of the network nodes and centrally calculates the forwarding paths. Ethernet, on the other hand, relies on periodically sent packets to keep MAC table entries up to date and on the VLAN mechanism to segment the network, which makes it relatively more complex.
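
The centralized role of the subnet manager described above can be illustrated with a small sketch: one component assigns an ID to every node and computes all forwarding tables from a global view of the topology. This is a simplified model under stated assumptions, not how any particular subnet manager is implemented. The function names and the four-node topology are hypothetical, routing is plain shortest-path via breadth-first search, and tables map a destination LID to a next-hop node name rather than to a switch port.

```python
from collections import deque


def assign_lids(nodes):
    """Give every node a unique local identifier (LID), starting at 1."""
    return {node: lid for lid, node in enumerate(sorted(nodes), start=1)}


def forwarding_tables(links, lids):
    """For each node, map destination LID -> next hop on a shortest path."""
    tables = {node: {} for node in links}
    for dest in links:
        # Breadth-first search outward from the destination: the node from
        # which a neighbour is first reached is that neighbour's next hop.
        seen = {dest}
        queue = deque([dest])
        while queue:
            current = queue.popleft()
            for neighbour in links[current]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    tables[neighbour][lids[dest]] = current
                    queue.append(neighbour)
    return tables


# Hypothetical topology: two hosts connected through two switches.
links = {
    "host_a": ["switch_1"],
    "host_b": ["switch_2"],
    "switch_1": ["host_a", "switch_2"],
    "switch_2": ["host_b", "switch_1"],
}

lids = assign_lids(links)
tables = forwarding_tables(links, lids)
print("LIDs:", lids)
print("switch_1 table:", tables["switch_1"])
```

Because every table is computed in one place, no node has to learn addresses by observing traffic, which is the contrast with MAC learning drawn above.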

The two networks also differ in topology. Ethernet supports a variety of topologies, including star and tree, and the design can be adapted flexibly to expand the network as demand grows. InfiniBand, on the other hand, usually uses a dedicated topology such as Fat Tree or Dragonfly that is optimized for high bandwidth and low latency but requires more design work for large-scale expansion.
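
To give a sense of the planning involved, the sketch below computes the size of a non-blocking fat tree built from identical switches, using the standard counting formulas for two-level and three-level designs. The function name is hypothetical, and the port counts in the example are arbitrary; real deployments often use oversubscription or partially populated trees, which this does not model.

```python
def fat_tree_size(ports_per_switch: int, levels: int) -> dict:
    """Host and switch counts for a non-blocking fat tree of k-port switches."""
    k = ports_per_switch
    if k < 2 or k % 2:
        raise ValueError("ports_per_switch must be a positive even number")
    if levels == 2:
        # k leaf switches (half the ports face hosts) plus k/2 spine switches.
        return {"hosts": k * k // 2, "switches": 3 * k // 2}
    if levels == 3:
        # k pods of k/2 edge and k/2 aggregation switches, plus (k/2)^2 cores.
        return {"hosts": k ** 3 // 4, "switches": 5 * k * k // 4}
    raise ValueError("only 2-level and 3-level trees are modelled here")


for k in (4, 40):
    for levels in (2, 3):
        print(f"{k}-port switches, {levels} levels:", fat_tree_size(k, levels))
```

The jump in switch count between two and three levels is one reason large-scale expansion needs to be planned up front.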

Application Scenarios

InfiniBand is widely used in high-performance computing environments due to its high bandwidth, low latency, and good support for parallel computing. Ethernet, on the other hand, is commonly used in enterprise networks, home networks, and similar settings, and still holds an important market position thanks to its low cost and extensive standardization. As the demand for computing power continues to increase, InfiniBand plays a particularly important role in the world's top 500 supercomputers.

Compatibility is another difference between the two networks. Almost all network devices support Ethernet connectivity, so products from different equipment vendors can easily be combined. InfiniBand has the advantage in performance, but it usually requires specialized equipment, which limits compatibility and flexibility.

QSFPTEK InfiniBand and Ethernet Optical Transceiver Introduction

QSFPTEK provides 400G NDR and 800G NDR InfiniBand optical modules. The 800G OSFP PAM4 optical transceiver is widely used in data centers for 800G and 2x 400G applications. For Ethernet, optical transceivers from 1G to 800G are available. In addition to regular modules, WDM transceivers and coherent transceivers are also available at many rates. Every QSFPTEK optical device is 100% tested in branded switch equipment to ensure reliable operation.

Conclusion

InfiniBand and Ethernet suit different scenarios. InfiniBand can significantly increase data rates while reducing the CPU burden, which results in higher network utilization and makes it one of the main solutions in HPC. Even higher-speed InfiniBand products are likely to emerge in the future. If latency requirements between data centers are not high and flexibility and scalability matter more, Ethernet is certainly an option worth considering.